A Python file that relies only on the standard library can be redistributed and reused without the need to use setuptools. But for projects that consist of multiple files, need additional libraries, or need a specific version of Python, setuptools will be required. This command will install the package to your system. The -e option stands for editable, which is important because it allows you to change the source code of your package without reinstalling it.

This is being done on Windows 10 Professional without admin rights (hence the embedded method of using Python 3.5). I was able to pip install openpyxl without issue, but running the same command for pyautogui (python -m pip install pyautogui) produced the described error message; the traceback is found above. I've done clean extractions of the zip and found the same problem. Introduce a new way to set config options via ini-style files; by default, setup.cfg and tox.ini files are searched. The old ways (certain environment variables, dynamic conftest.py reading) are removed.

Dataclasses are Python classes that are suited for storing data objects. The dataclasses module provides a decorator and functions for automatically adding generated special methods such as __init__() and __repr__() to user-defined classes. setuptools.Command now supports reinitializing commands using keyword arguments to set/reset options. Also, Command subclasses can now set their command_consumes_arguments attribute to True in order to receive an args option containing the rest of the command line.
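To make the dataclasses point above concrete, here is a minimal sketch. The class name `PackageInfo` and its fields are hypothetical and chosen only for illustration; the decorator generates `__init__()`, `__repr__()`, and `__eq__()` from the annotated fields.

```python
from dataclasses import dataclass


@dataclass
class PackageInfo:
    """Hypothetical record describing a package (illustrative only)."""

    name: str
    version: str = "0.1.0"  # fields with defaults must follow those without


# __init__ is generated from the annotated fields.
info = PackageInfo("demo")

# __repr__ is generated as well.
print(info)

# __eq__ compares instances field by field.
same = info == PackageInfo("demo", "0.1.0")
```

Without the decorator, each of these special methods would have to be written by hand.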

The egg_info command now adds a top_level.txt file to the metadata directory that lists all top-level modules and packages in the distribution. This is used by the easy_install command to find possibly-conflicting "unmanaged" packages when installing the distribution. When you run ez_setup.py to install setuptools into a Python environment where setuptools is not already installed, everything works great.

I have been following all the documentation and the posts in this forum, but they have not been helpful. Added support for parallel reading of source files with the sphinx-build -j option. Third-party extensions will need to be checked for compatibility and may need to be adapted if they store information in the build environment object. As with most parts of Python, the import system can be customized. Just like regular modules and packages, namespace packages must be found on the Python import path.

If you were following along with the previous examples, then you might have had issues with Python not finding serializers. In actual code, you would have used pip to install the third-party library, so it would be in your path automatically. Python modules and packages are very closely related to files and directories. This sets Python apart from many other programming languages in which packages merely act as namespaces without enforcing how the source code is organized. See the discussion in PEP 402 for examples. Thanks to Pavel Savchenko for bringing the problem to attention in #821 and Bruno Oliveira for the PR.

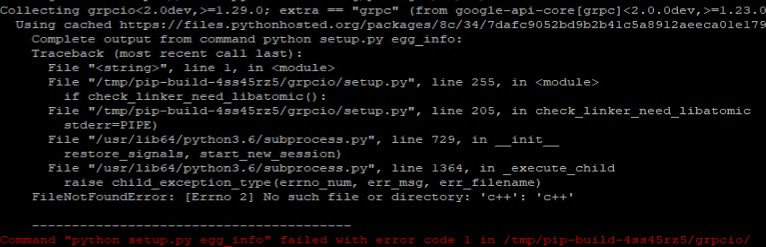

When the command is run, all of the dependencies not already installed will be downloaded, built, and installed. Any scripts that require specific dependencies at runtime will be installed with wrappers that ensure the correct versions are added to sys.path. The current builds of Python for Windows use the MSVC 14.X libraries which are covered under the Microsoft Visual Studio licenses. This means that any extension modules that are generated also fall under the same Microsoft licenses. There are 37 items on my sys.path at the moment.

Profiling import pkg_resources shows that this leads to 76 calls to workingset.add_entry, of which most of the time is spent in 466 calls to Distribution.from_location. sphinx-build now provides more specific error messages when called with invalid options or arguments. This is a great optimization, but it's also a necessity. Introduce a pytest_cmdline_processargs hook to allow dynamic computation of command line arguments. This fixes a regression: py.test prior to 2.0 allowed setting command line options from conftest.py files, which pytest-2.0 so far only allowed from ini-files.

Simplified and fixed implementation for calling finalizers when parametrized fixtures or function arguments are involved. Finalization is now performed lazily at setup time instead of in the "teardown phase". While this might sound odd at first, it helps to ensure that we are correctly handling setup/teardown even in complex code. User-level code should not be affected unless it's implementing the pytest_runtest_teardown hook and expecting certain fixture instances to be torn down within. See issue #2959 for details and a recommended workaround.

These environment variables cause Python to load files from locations other than the standard ones. Conda was intended as a user space tool, but often users need to use it in a global environment. One place this can go awry is with restrictive file permissions.

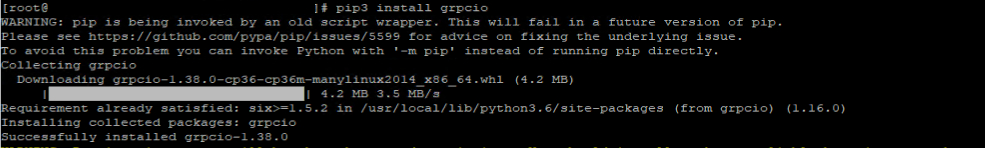

Conda creates links when you install files that have to be read by others on the system. As indicated in the above logs, notice that the system is using an earlier version of pip, which is not compatible with the oslo wheel file. Notice also that the installation is using Python 2.7 (/usr/lib/python2.7/site-packages/pkg_resources.py) and not 3.x. Made easy_install a standard setuptools command, moving it from the easy_install module to setuptools.command.easy_install. Note that if you were importing or extending it, you must now change your imports accordingly.

easy_install.py is still installed as a script, but not as a module. Fix test command possibly failing if an older version of the project being tested was installed on sys.path ahead of the test source directory. Questions, comments, and bug reports should be directed to the distutils-sig mailing list. If you have working, tested patches to correct problems or add features, you may submit them to the setuptools bug tracker. If I run pip install --three jupyter, it removes the existing Python3 environment, creates a new Python3 environment, and then I get the same error message again. This prevents Windows Python 3 from accessing the Unicode command line arguments which are sent by Windows when running Python scripts via the usual python.exe command.

When run through launchers, sys.argv instead contains replacement strings like "?????", which is not nice. On a source directory that already has a dist, the existing directory structure is used when preparing the wheel. This causes an exception if an existing wheel with a different name exists, because build_meta assumes there's only one wheel in dist.

Anything that ends execution of the block causes the context manager's exit method to be called. This includes exceptions, and can be useful when an error causes you to prematurely exit from an open file or connection. Exiting a script without properly closing files or connections is a bad idea that may cause data loss or other problems.
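The behavior described above can be sketched with a small hand-written context manager. The `ManagedResource` class is a hypothetical stand-in for a file or connection; the point is only that `__exit__` runs even when the block raises.

```python
class ManagedResource:
    """Hypothetical stand-in for a file or connection (illustrative only)."""

    def __init__(self):
        self.closed = False

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        # Called however the block ends: normally, via return, or via exception.
        self.closed = True
        return False  # returning False lets any exception propagate


resource = ManagedResource()
try:
    with resource:
        raise ValueError("something went wrong inside the block")
except ValueError:
    pass

# The cleanup ran even though the block exited through an exception.
print(resource.closed)
```

The built-in `open()` follows the same protocol, which is why `with open(...) as f:` closes the file even if the body fails.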

By using a context manager you can ensure that precautions are always taken to prevent damage or loss in this way. Script that autogenerates a hierarchy of source files containing autodoc directives to document modules and packages. The CSV data is loaded by .exec_module(). You can use csv.DictReader from the standard library to do the actual parsing of the file.

Like most things in Python, modules are backed by dictionaries. By adding the CSV data to module.__dict__, you make it available as attributes of the module. Compared to the finders you saw earlier, this one is slightly more complicated.
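As a small illustration of modules being backed by dictionaries, the sketch below builds an empty module object and adds data to its `__dict__`. The module name `employees` and the sample rows are hypothetical.

```python
import types

# Create an empty module object; "employees" is an illustrative name.
module = types.ModuleType("employees")

# Anything added to the module's __dict__ becomes a module attribute.
module.__dict__.update(
    {
        "fieldnames": ["name", "team"],
        "rows": [{"name": "Ada", "team": "tools"}],
    }
)

print(module.fieldnames)
print(module.rows[0]["name"])
```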

By putting this finder last in the list of finders, you know that if you call PipFinder, then the module won't be found on your system. The job of .find_spec() is therefore just to do the pip install. If the installation works, then the module spec will be created and returned.
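A finder along these lines might look like the sketch below. It is an assumption-laden illustration rather than the original implementation: it assumes the module name matches the package name on the package index, which is often not the case, and it installs whatever name is requested, so it is not something to enable outside of experiments. The registration line is left commented out for that reason.

```python
import importlib
import subprocess
import sys
from importlib.machinery import PathFinder


class PipFinder:
    """Sketch of a last-resort finder that tries to pip install a module."""

    @classmethod
    def find_spec(cls, name, path=None, target=None):
        # Assumption: the module name equals the name of the package to install.
        cmd = [sys.executable, "-m", "pip", "install", name]
        try:
            subprocess.run(cmd, check=True)
        except subprocess.CalledProcessError:
            return None  # installation failed, so the import fails as usual

        # Make the import system notice newly installed files, then ask the
        # regular path-based finder directly to avoid calling ourselves again.
        importlib.invalidate_caches()
        return PathFinder.find_spec(name, path)


# To experiment, append the finder so that it is consulted last:
# sys.meta_path.append(PipFinder)
```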

Remember, when distributing your package, you're not even guaranteed that resource files will exist as physical files on the file system. The importlib.resources module solves this by providing path(). This function will return a path to the resource file, creating a temporary file if necessary. You won't have control over the path to the resource since that will depend on your user's setup as well as on how the package is distributed and installed.
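Here is a minimal sketch of `path()` in use. To stay self-contained it reads a file from the standard library's `json` package instead of a real data file; that choice is only for illustration. Some Python versions emit a deprecation warning for `path()`, and newer versions also offer `files()` and `as_file()` for the same purpose.

```python
from importlib import resources

# path() is a context manager: the returned path is only guaranteed to
# exist inside the with block, since it may point to a temporary file.
with resources.path("json", "__init__.py") as resource_path:
    found = resource_path.is_file()
    file_name = resource_path.name

print(found, file_name)
```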

You can try to figure out the resource path based on your package's __file__ or __path__ attributes, but this may not always work as expected. For example, someone might want to import the script into a Jupyter Notebook and run it from there. Or they may want to reuse the files library in another project. They may even create an executable with PyInstaller to more easily distribute it. Unfortunately, any of these scenarios can create issues with the import of files.

How does Python find the modules and packages it imports? You'll see more details about the mechanics of the Python import system later. For now, just know that Python looks for modules and packages in its import path. This is a list of locations that are searched for modules to import.
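You can inspect the import path yourself. The short sketch below lists the locations in `sys.path` and then asks the import system where it would load the standard library's `json` package from, without importing it.

```python
import importlib.util
import sys

# sys.path is a plain list of locations that are searched in order.
for location in sys.path:
    print(location)
print(f"{len(sys.path)} entries on sys.path")

# find_spec() reports where a module would be loaded from.
spec = importlib.util.find_spec("json")
print(spec.origin)
```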

It's fairly common to import subpackages and submodules in an __init__.py file to make them more readily available to your users. You can see one example of this in the popular requests package. Fix issue99: internal errors with resultlog now produce better output, fixed by normalizing pytest_internalerror input arguments. If you're packaging a Python package for publication, how do you ensure that all of the required dependencies are included? Just like pip is the standard package manager for Python, setup.py is the heart and center of Python projects installed with pip. Simply put, setup.py is a build script template distributed with Python's setuptools package.

Directory instead of directly in the current directory. This choice of location means the files can be readily managed. Additionally, later phases or invocations of setuptools will not detect the package as already installed and ignore it for permanent install (See #209). Feature of the pydoc module which could be abused to read arbitrary files on the disk. Moreover, even source code of Python modules can contain sensitive data like passwords. Vulnerability reported by David Schwörer.

PyPI packages can also be searched for using Artifactory's Property Search. All PyPI packages have the properties pypi.name, pypi.version and pypi.summary set by the uploading client, or later during indexing for supported file types. Where more than one source of the chosen version is available, it is assumed that any source is acceptable. As a result, when pip imports setuptools before building legacy packages, those distutils-only packages will continue to get the setuptools treatment. This obviously affects all of the python scripts that are installed as console entry points, because each and every one of them starts with a line like that. In code which does not rely on entry points this may be a problem whenever I want to use resource_filename to consistently access static data.

The setup script is the centre of all activity in building, distributing, and installing modules using the Distutils. While Python's context managers are widely used, few understand the purpose behind their use. There are two main modules in Python that deal with path manipulation. One is the os.path module and the other is the pathlib module. The pathlib module was added in Python 3.4, offering an object-oriented way to handle file system paths.
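The difference between the two path modules is easiest to see side by side. In this sketch the path components are hypothetical; `os.path` works on plain strings, while `pathlib` wraps the path in an object with operators and attributes.

```python
import os.path
from pathlib import Path

# os.path: functions that take and return strings.
joined_str = os.path.join("project", "src", "module.py")
stem_str, ext_str = os.path.splitext(os.path.basename(joined_str))

# pathlib: the same operations expressed on a Path object.
joined_path = Path("project") / "src" / "module.py"
stem_path, ext_path = joined_path.stem, joined_path.suffix

print(joined_str, stem_str, ext_str)
print(joined_path, stem_path, ext_path)
```

Both approaches produce the same result on a given platform; `pathlib` mainly saves nested function calls when several operations are chained.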

Currently, there is no way to specify WT as a dependency for Python. The user has to build WT manually, instead of setuptools doing this job. IPython ships with a basic system for running scripts interactively in sections, useful when presenting code to audiences. The interactive namespace is updated after each block is run with the contents of the demo's namespace.

At any time, hitting TAB will complete any available python commands or variable names, and show you a list of the possible completions if there's no unambiguous one. It will also complete filenames in the current directory if no python names match what you've typed so far. This behavior is different from standard Python, which when called as python -i will only execute one file and ignore your configuration setup.

One concern when importing several modules and packages is that it will add to the startup time of your script. Depending on your application, this may or may not be critical. Still, there are times when it's reasonable to introduce an import cycle. As you saw above, this isn't a problem so long as your modules define only attributes, functions, classes, and so on.

The second tip—which is also good design practice—is to keep your modules free of side effects at import time. For top-level imports, path will be None. In that case, you look for the CSV file in the full import path, which will include the current working directory. If you're importing a CSV file within a package, then path will be set to the path or paths of the package.

If you find a matching CSV file, then a module spec is returned. This module spec tells Python to load the module using CsvImporter. You saw earlier that creating modules with the same name as standard libraries can create problems.
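The finder and loader described above can be sketched roughly as follows. This is a simplified illustration, not the original code: the attribute names `rows` and `fieldnames`, the file name `employees_demo.csv`, and its contents are assumptions made for the demo, which writes a small CSV file to a temporary directory and imports it.

```python
import csv
import importlib.util
import pathlib
import sys
import tempfile


class CsvImporter:
    """Sketch of a combined finder and loader for <name>.csv files."""

    def __init__(self, csv_path):
        self.csv_path = csv_path

    @classmethod
    def find_spec(cls, name, path=None, target=None):
        # Top-level import: path is None, so search the full import path.
        # Import within a package: path holds the package's directories.
        *_, module_name = name.rpartition(".")
        directories = sys.path if path is None else path
        for directory in directories:
            csv_path = pathlib.Path(directory or ".") / f"{module_name}.csv"
            if csv_path.is_file():
                return importlib.util.spec_from_loader(name, cls(csv_path))
        return None  # no matching CSV file; let the import fail normally

    def create_module(self, spec):
        return None  # use Python's default module creation

    def exec_module(self, module):
        # Parse the file and expose the data as module attributes.
        with self.csv_path.open(newline="") as fid:
            reader = csv.DictReader(fid)
            rows = list(reader)
            fieldnames = reader.fieldnames
        module.__dict__.update({"rows": rows, "fieldnames": fieldnames})


with tempfile.TemporaryDirectory() as tmp:
    pathlib.Path(tmp, "employees_demo.csv").write_text("name,team\nAda,tools\n")
    sys.path.append(tmp)
    sys.meta_path.append(CsvImporter)  # consulted after the regular finders
    try:
        import employees_demo
    finally:
        sys.meta_path.remove(CsvImporter)
        sys.path.remove(tmp)

print(employees_demo.fieldnames)
print(employees_demo.rows)
```

Because the importer is appended to the end of `sys.meta_path`, it is only asked after the regular finders have failed to locate a module with that name.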

For example, if you have a file named math.py in Python's import path, then you won't be able to import math from the standard library. Reading the data from disk takes some time. Since you don't expect the data file to change, you instantiate the class when you load the module. The name of the class starts with an underscore to indicate to users that they shouldn't use it.